Monday, 7 October 2013

UNIT 1 Coherent and Non-Coherent Communication Answers

Part A

1. Differentiate Coherent and Non-Coherent Receivers

Ans : Coherent detection requires carrier phase recovery at the receiver and hence circuits to perform phase estimation. Sources of carrier-phase mismatch at the receiver:

* Propagation delay causes a carrier-phase offset in the received signal.

* The oscillators at the receiver that generate the carrier signal are not usually phase locked to the transmitted carrier.

Non-coherent detection: there is no need for a reference in phase with the received carrier.

* Less complexity compared to coherent detection, at the price of a higher error rate.

Coherent (synchronous) detection: in coherent detection, the local carrier generated at the receiver is phase locked with the carrier at the transmitter.

Non-coherent (envelope) detection: this type of detection does not need the receiver carrier to be phase locked with the transmitter carrier.
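To illustrate the difference, the sketch below (Python with NumPy) correlates a received carrier that has an unknown phase offset θ against a local reference. The sample rate, carrier frequency and symbol duration are arbitrary assumptions chosen so the correlation window holds a whole number of carrier cycles. The coherent output uses only the in-phase reference and therefore scales with cos θ, while the non-coherent output takes the envelope of the in-phase and quadrature correlations and does not depend on θ.

```python
import numpy as np

fs, fc, T = 8000.0, 1000.0, 0.01                 # assumed sample rate, carrier, symbol duration
t = np.arange(int(round(fs * T))) / fs           # 80 samples = 10 full carrier cycles
ref_cos = np.cos(2 * np.pi * fc * t)             # local in-phase reference
ref_sin = np.sin(2 * np.pi * fc * t)             # local quadrature reference

def coherent_output(theta):
    """Correlate with the in-phase reference only: result is cos(theta)."""
    r = np.cos(2 * np.pi * fc * t + theta)       # received carrier with phase offset theta
    return 2.0 / t.size * np.dot(r, ref_cos)

def noncoherent_output(theta):
    """Envelope of the I/Q correlations: result is 1 for any theta."""
    r = np.cos(2 * np.pi * fc * t + theta)
    i = 2.0 / t.size * np.dot(r, ref_cos)
    q = 2.0 / t.size * np.dot(r, ref_sin)
    return np.hypot(i, q)

for theta in (0.0, np.pi / 4, np.pi / 2, np.pi):
    print(f"theta={theta:.2f}  coherent={coherent_output(theta):+.3f}  "
          f"envelope={noncoherent_output(theta):.3f}")
```

A phase error of π/2 drives the coherent correlator output to zero, which is why the coherent receiver needs phase recovery circuits; the envelope detector avoids that circuitry but, in the presence of noise, pays for it with a higher error rate.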

2. Define Rayleigh Channel

ans :

- A Rayleigh channel is a communication channel whose fading envelope follows the Rayleigh probability density function.

Rayleigh fading models assume that the magnitude of a signal that has passed through such a transmission medium (also called a communications channel) will vary randomly, or fade, according to a Rayleigh distribution: the radial component of the sum of two uncorrelated, zero-mean Gaussian random variables of equal variance.
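The definition can be checked numerically. This sketch (Python with NumPy; σ = 1, the sample count and the seed are arbitrary choices) draws independent zero-mean Gaussian in-phase and quadrature components and takes their magnitude. The sample mean should approach the Rayleigh mean σ√(π/2) and the mean power should approach 2σ².

```python
import numpy as np

def rayleigh_envelope(num_samples, sigma=1.0, seed=0):
    """Magnitude of I + jQ with I, Q independent zero-mean Gaussians of std sigma."""
    rng = np.random.default_rng(seed)
    i = rng.normal(0.0, sigma, num_samples)      # in-phase component
    q = rng.normal(0.0, sigma, num_samples)      # quadrature component
    return np.hypot(i, q)                        # envelope, Rayleigh distributed

sigma = 1.0
r = rayleigh_envelope(200_000, sigma)
mean_theory = sigma * np.sqrt(np.pi / 2)         # Rayleigh mean, about 1.2533 for sigma = 1
power_theory = 2 * sigma**2                      # E[r^2]
print(r.mean(), mean_theory)
print(np.mean(r**2), power_theory)
```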

3. Minimum distance for decoding for an optimum waveform receiver

ans :

4. Define Optimum M-FSK Receiver

ans : The M tones are spaced symmetrically around the carrier frequency fc, so the received signal can be modeled by

$$r(t) = \sqrt{2P}\,\cos\!\left(2\pi f_c t + 2\pi \frac{k}{T} t + \theta\right) + n(t)$$

where θ is the carrier phase and n(t) is the additive noise. The optimum receiver is the M-ary non-coherent receiver, whose reference signals are the corresponding sinusoids.
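A minimal sketch of such a receiver, in Python with NumPy, under assumed parameters: M = 4 tones at fc + k/T with k in {-1.5, -0.5, 0.5, 1.5}, a sampled signal, and white Gaussian noise. For every tone it correlates r(t) with a cosine and a sine, forms the envelope, and picks the largest; the phase θ never has to be estimated. The helper names `received` and `noncoherent_mfsk_detect` are hypothetical.

```python
import numpy as np

FS, FC, T, M = 8000.0, 1000.0, 0.01, 4           # assumed sample rate, carrier, symbol time, tones
t = np.arange(int(round(FS * T))) / FS           # sample instants within one symbol
K = np.arange(M) - (M - 1) / 2                   # symmetric offsets: -1.5, -0.5, 0.5, 1.5
freqs = FC + K / T                               # tone frequencies fc + k/T

def received(symbol, power=1.0, theta=0.0, noise_std=0.0, rng=None):
    """Sampled r(t) = sqrt(2P) cos(2 pi fc t + 2 pi (k/T) t + theta) + n(t)."""
    r = np.sqrt(2 * power) * np.cos(2 * np.pi * freqs[symbol] * t + theta)
    if noise_std > 0:
        if rng is None:
            rng = np.random.default_rng()
        r = r + rng.normal(0.0, noise_std, t.size)   # white Gaussian noise (assumed)
    return r

def noncoherent_mfsk_detect(r):
    """Correlate with cos and sin of every tone, form envelopes, choose the largest."""
    phase = 2 * np.pi * np.outer(freqs, t)       # shape (M, number of samples)
    i = np.cos(phase) @ r                        # in-phase correlations
    q = np.sin(phase) @ r                        # quadrature correlations
    return int(np.argmax(np.hypot(i, q)))        # theta never enters the decision

rng = np.random.default_rng(1)
decisions = [noncoherent_mfsk_detect(received(s, theta=rng.uniform(0, 2 * np.pi),
                                              noise_std=0.5, rng=rng))
             for s in range(M)]
print(decisions)
```

The tone spacing of 1/T keeps the envelopes of the non-transmitted tones at zero in the noiseless case, whatever θ is.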

5. Define Suboptimum M-FSK Receiver

Sunday, 6 October 2013

UNIT II SPECTRAL ESTIMATION

2 marks

1. Define spectrum estimation.

2. What are the applications of spectral estimation?

3. State nonparametric methods and mention its various methods.

4. Define periodogram.

5. Illustrate the filter bank interpretation of the periodogram.

Part B

1. Explain how the Periodogram is used for spectrum estimation and describe the performance of the Periodogram. (12)

2. Describe Bartlett’s method and Welch’s method of spectrum estimation. (12)

UNIT I DISCRETE RANDOM SIGNAL PROCESSING answers

1. Define discrete random process.

ans: A discrete-time random process is a collection, or ensemble, of discrete-time signals. Formally, it is a mapping from the sample space Ω into the set of discrete-time signals x(n).

2. When a random process is called as wide-sense stationary?

ans : A random process x(n) is said to be wide-sense stationary if the following conditions are satisfied:

* The mean of the process is a constant, mx(n) = mx.

* The autocorrelation rxx(k, l) depends only on the difference k - l.

* The variance of the process is finite, cx(0) < ∞.
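As an illustration of these conditions and of ensemble averaging, the sketch below (Python with NumPy) uses the random-phase sinusoid x(n) = A cos(ω0 n + φ) with φ uniform on [0, 2π). This is a standard WSS example chosen here as an assumption; it does not come from the notes. Each row of the array is one realization, so averaging down a column gives an ensemble average at a fixed time n. The mean is close to 0 at every n, and rxx(n + 2, n) comes out nearly the same at n = 10 and n = 40, matching the theoretical value (A²/2) cos(2ω0).

```python
import numpy as np

def random_phase_ensemble(num_realizations, length, amplitude=1.0, omega0=0.3, seed=0):
    """Ensemble of x(n) = A cos(omega0 n + phi), one realization per row, phi ~ U[0, 2 pi)."""
    rng = np.random.default_rng(seed)
    phi = rng.uniform(0.0, 2.0 * np.pi, size=(num_realizations, 1))
    n = np.arange(length)
    return amplitude * np.cos(omega0 * n + phi)

def ensemble_autocorr(X, k, l):
    """Ensemble-average estimate of rxx(k, l) = E[x(k) x(l)] for a real process."""
    return np.mean(X[:, k] * X[:, l])

X = random_phase_ensemble(20000, 50)
r_theory = 0.5 * np.cos(0.3 * 2)                 # (A^2 / 2) cos(omega0 * lag), lag = 2
print(X[:, 5].mean(), X[:, 30].mean())           # both near 0: constant mean
print(ensemble_autocorr(X, 12, 10), ensemble_autocorr(X, 42, 40), r_theory)
```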

3. State Wiener – Khintchine relation.

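ans : It states that the autocorrelation function of a wide-sense stationary random process and the power spectrum of that process form a Fourier transform pair; that is, the power spectrum is the spectral decomposition of the autocorrelation.

Let x(n) be a real WSS process; then rxx(l) <-> Sxx(ω), with

$$S_{xx}(\omega) = \sum_{l=-\infty}^{\infty} r_{xx}(l)\, e^{-j\omega l}, \qquad r_{xx}(l) = \frac{1}{2\pi}\int_{-\pi}^{\pi} S_{xx}(\omega)\, e^{j\omega l}\, d\omega$$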

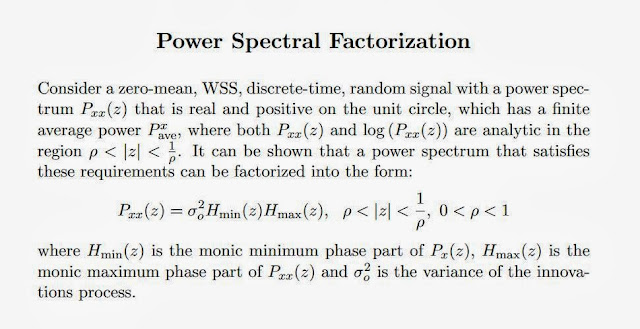
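A finite-length counterpart of this relation holds exactly: the periodogram |X(e^{jω})|²/N of N samples equals the DTFT of the biased sample autocorrelation r̂xx(l), l = -(N-1), ..., N-1. The sketch below (Python with NumPy; the helper names are hypothetical) evaluates both sides on an FFT grid of at least 2N - 1 points so that positive and negative lags do not overlap.

```python
import numpy as np

def biased_autocorr(x):
    """r_hat(l) = (1/N) sum_n x(n + l) x(n), lags -(N-1)..(N-1); lag 0 at index N-1."""
    return np.correlate(x, x, mode="full") / len(x)

def periodogram_via_autocorr(x, nfft):
    """DFT of the biased autocorrelation, lags arranged circularly."""
    N = len(x)
    if nfft < 2 * N - 1:
        raise ValueError("nfft must be at least 2N - 1 to avoid lag wrap-around")
    r = biased_autocorr(x)
    buf = np.zeros(nfft)
    buf[:N] = r[N - 1:]                          # lags 0 .. N-1
    buf[nfft - (N - 1):] = r[:N - 1]             # lags -(N-1) .. -1 at the end
    return np.fft.fft(buf).real                  # imaginary part is rounding error

def periodogram_direct(x, nfft):
    """|X(k)|^2 / N from the zero-padded DFT of the data."""
    return np.abs(np.fft.fft(x, nfft)) ** 2 / len(x)

rng = np.random.default_rng(0)
x = rng.standard_normal(64)
print(np.max(np.abs(periodogram_via_autocorr(x, 128) - periodogram_direct(x, 128))))
```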

4. State spectral factorization theorem.

ans:

5. Write down the properties of regular process.

ans :

* Any regular process may be realized as the output of a causal and stable filter H(z) that is driven by white noise ν(n) having variance σ^2.

* The inverse filter 1/H(z) is a whitening filter; that is, if x(n) is filtered with 1/H(z), the output is white noise with variance σ^2.

* Since ν(n) and x(n) are related by an invertible transformation, either process may be derived from the other; therefore both contain the same information.
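A minimal first-order sketch of these properties (Python with NumPy). The AR(1) model, the coefficient a = 0.9 and the sample count are assumptions for illustration: white noise ν(n) with σ^2 = 1 is shaped by H(z) = 1/(1 - a z^-1), and the inverse filter 1/H(z) = 1 - a z^-1 recovers ν(n) exactly, so the strongly correlated x(n) is turned back into white noise.

```python
import numpy as np

def ar1_process(v, a):
    """Shape white noise with the causal, stable filter H(z) = 1 / (1 - a z^-1), |a| < 1."""
    x = np.zeros_like(v)
    prev = 0.0
    for n, vn in enumerate(v):
        prev = a * prev + vn                     # x(n) = a x(n-1) + v(n)
        x[n] = prev
    return x

def whiten_ar1(x, a):
    """Apply the inverse (whitening) filter 1/H(z) = 1 - a z^-1."""
    x_prev = np.concatenate(([0.0], x[:-1]))     # x(n-1), zero initial condition
    return x - a * x_prev

rng = np.random.default_rng(0)
a = 0.9
v = rng.standard_normal(5000)                    # white noise, variance 1
x = ar1_process(v, a)
v_rec = whiten_ar1(x, a)
print(np.corrcoef(x[1:], x[:-1])[0, 1])          # near a: x(n) is correlated
print(np.corrcoef(v_rec[1:], v_rec[:-1])[0, 1])  # near 0: output is white
```

Because the transformation is invertible, no information is lost in either direction, which is the third property above.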

6. When a wide-sense stationary process is said to be white noise?

ans:

7. Write autocorrelation and autocovariance matrices.

ans : The autocorrelation matrix is used in various digital signal processing algorithms. It consists of elements of the discrete autocorrelation function: for a WSS process and the data vector x = [x(0), x(1), ..., x(N-1)]^T, the (i, j) entry of Rx = E[x x^H] is Rxx(i - j), with Rxx(-k) = R*xx(k), so each diagonal of the matrix holds a single autocorrelation lag.

The autocovariance matrix Σ of a random vector X = [X_1, X_2, ..., X_n]^T has entries Σ_ij = E[(X_i - μ_i)(X_j - μ_j)], where μ_i = E[X_i] is the expected value of the ith entry in the vector X. In other words, we have

$$
\Sigma = \begin{bmatrix}
\mathrm{E}[(X_1 - \mu_1)(X_1 - \mu_1)] & \mathrm{E}[(X_1 - \mu_1)(X_2 - \mu_2)] & \cdots & \mathrm{E}[(X_1 - \mu_1)(X_n - \mu_n)] \\
\mathrm{E}[(X_2 - \mu_2)(X_1 - \mu_1)] & \mathrm{E}[(X_2 - \mu_2)(X_2 - \mu_2)] & \cdots & \mathrm{E}[(X_2 - \mu_2)(X_n - \mu_n)] \\
\vdots & \vdots & \ddots & \vdots \\
\mathrm{E}[(X_n - \mu_n)(X_1 - \mu_1)] & \mathrm{E}[(X_n - \mu_n)(X_2 - \mu_2)] & \cdots & \mathrm{E}[(X_n - \mu_n)(X_n - \mu_n)]
\end{bmatrix}.
$$
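The definition translates directly into an estimator: subtract the sample mean of each entry, then average the outer products. The sketch below (Python with NumPy) does this on synthetic data whose true covariance A A^T is known; the mixing matrix A, the mean vector and the sample count are arbitrary assumptions. NumPy's `np.cov` with `rowvar=False, bias=True` uses the same 1/N normalization, so it serves as a cross-check.

```python
import numpy as np

def autocovariance_matrix(samples):
    """Estimate Sigma; each row of `samples` is one observation of X = [X_1, ..., X_n]."""
    mu = samples.mean(axis=0)                    # estimate of mu_i = E[X_i]
    centered = samples - mu                      # X_i - mu_i for every observation
    return centered.T @ centered / samples.shape[0]   # average of (X_i - mu_i)(X_j - mu_j)

rng = np.random.default_rng(0)
A = np.array([[1.0, 0.0, 0.0],
              [0.5, 1.0, 0.0],
              [0.2, -0.3, 1.0]])                 # assumed mixing matrix
samples = rng.standard_normal((5000, 3)) @ A.T + np.array([1.0, -2.0, 0.5])
Sigma_hat = autocovariance_matrix(samples)
print(np.round(Sigma_hat, 2))
print(np.round(A @ A.T, 2))                      # true covariance of this synthetic X
```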

8. Write the properties of autocorrelation matrix.

Property 1: The autocorrelation matrix of a WSS process is a Hermitian matrix and a Toeplitz matrix.

Property 2: The autocorrelation matrix of a WSS process is nonnegative definite, Rx ≥ 0.

Property 3: The eigenvalues λk of the autocorrelation matrix of a WSS process are real-valued and nonnegative.
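The three properties can be checked numerically on a concrete WSS autocorrelation. The sketch below (Python with NumPy) assumes a complex first-order process with rxx(k) = (a e^{jω0})^k for k ≥ 0 and rxx(-k) = r*xx(k); the values a = 0.8, ω0 = 0.5 and N = 6 are arbitrary. It builds Rx[i, j] = rxx(i - j) and tests Hermitian symmetry, constant diagonals and the sign of the eigenvalues.

```python
import numpy as np

def autocorr_matrix(r_nonneg):
    """Build Rx[i, j] = rxx(i - j) from rxx(0..N-1), using rxx(-k) = conj(rxx(k))."""
    N = len(r_nonneg)
    R = np.empty((N, N), dtype=complex)
    for i in range(N):
        for j in range(N):
            k = i - j
            R[i, j] = r_nonneg[k] if k >= 0 else np.conj(r_nonneg[-k])
    return R

a, w0, N = 0.8, 0.5, 6
r = (a * np.exp(1j * w0)) ** np.arange(N)        # assumed autocorrelation, lags 0..N-1
R = autocorr_matrix(r)

hermitian = np.allclose(R, R.conj().T)           # Property 1: R = R^H
toeplitz = all(np.allclose(np.diag(R, d), np.diag(R, d)[0])
               for d in range(-N + 1, N))        # Property 1: constant diagonals
eigs = np.linalg.eigvalsh(R)                     # Property 3: real for Hermitian R
print(hermitian, toeplitz, eigs)                 # Property 2 <=> all eigs >= 0
```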

9. What are Ensemble averages?

10. For given two random processes, x(n) and y(n), define cross-covariance and cross-correlation.

ans :

UNIT I DISCRETE RANDOM SIGNAL PROCESSING

PART A

1. Define discrete random process.

2. When a random process is called as wide-sense stationary?

3. State Wiener – Khintchine relation.

4. State spectral factorization theorem.

5. Write down the properties of regular process.

6. When a wide-sense stationary process is said to be white noise?

7. Write autocorrelation and autocovariance matrices.

8. Write the properties of autocorrelation matrix.

9. What are Ensemble averages?

10. For given two random processes, x(n) and y(n), define cross-covariance and cross-correlation.

11. When two random processes are said to be orthogonal?

12. Write the properties of Wide Sense Stationary.

13. Write the power spectrum of a WSS process filtered with linear shift-invariant filter.

14. What is a regular process?

15. Write Autocorrelation of a Sum of Processes.

PART B

1. Derive the power spectral density of the process. (10)

2. State and prove Parseval’s theorem. (8)

3. Explain Autocorrelation and Autocovariance matrices. Also explain their properties. (10)

4. Explain in detail about parameter estimation: Bias and Consistency. (10)

5. Explain Spectral factorization. What is a regular process? State the properties of a regular process. (12)

6. Explain filtering random processes. (12)

$$
\mathbf{R}_x = E[\mathbf{x}\mathbf{x}^H] = \begin{bmatrix}
R_{xx}(0) & R^*_{xx}(1) & R^*_{xx}(2) & \cdots & R^*_{xx}(N-1) \\
R_{xx}(1) & R_{xx}(0) & R^*_{xx}(1) & \cdots & R^*_{xx}(N-2) \\
R_{xx}(2) & R_{xx}(1) & R_{xx}(0) & \cdots & R^*_{xx}(N-3) \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
R_{xx}(N-1) & R_{xx}(N-2) & R_{xx}(N-3) & \cdots & R_{xx}(0)
\end{bmatrix}
$$